A disturbing situation recently surfaced involving the popular podcaster and interviewer Bobbi Althoff. A fake, sexually explicit video began circulating across the internet, particularly on X, the platform many people still call Twitter. The incident raises serious concerns about digital safety and personal privacy.

Bobbi Althoff did not create this video and was not involved with it in any way. The available information suggests it was a deepfake, put together using artificial intelligence. This is a serious matter because it means someone's image can be taken and used to create something untrue and potentially harmful, without their permission or participation.

The situation adds to ongoing discussions about the right and wrong ways to use technology, especially AI. When a well-known person like Bobbi Althoff becomes a victim, it brings these issues into sharper focus for everyone. It raises the question of how we protect people's images and reputations in a world where fake content can look incredibly real and spread quickly.

Table of Contents

- Who is Bobbi Althoff- A Quick Look

- What Exactly is This Bobbi Althoff Deepfake Porn Incident?

- How Do These Fake Videos Spread- The Bobbi Althoff Deepfake Porn Example

- What Are the Real-World Effects of Bobbi Althoff Deepfake Porn?

- Are There Any Rules About Bobbi Althoff Deepfake Porn?

- What Can People Do About Deepfakes Like Bobbi Althoff Deepfake Porn?

- Other Details from the Source Material

- Thinking About Digital Likeness and Bobbi Althoff Deepfake Porn

Who is Bobbi Althoff- A Quick Look

Bobbi Althoff is an American podcaster and online influencer. She has become well known for interviews that have caught on with a wide audience. Her conversations with big names like Drake, Lil Yachty, and Offset have made waves. She hosts a show called "The Really Good Podcast."

She is a social media personality with, according to the source, over 80 followers. She is also described as a skilled interviewer with quite a few weeks of experience talking to famous people. Her professional contact is bobbialthoffteam@unitedtalent.com, a standard way for someone in her line of work to be reached.

Here are some personal details about Bobbi Althoff, as given in the source material:

| Detail | Information |
| --- | --- |
| Born | July 31, 1997 |
| Occupation | Podcaster, Influencer |
| Known For | Viral interviews with celebrities (Drake, Lil Yachty, Offset), "The Really Good Podcast" |
| Social Media Following | Over 80 followers (as stated in source) |
| Professional Contact | bobbialthoffteam@unitedtalent.com |

What Exactly is This Bobbi Althoff Deepfake Porn Incident?

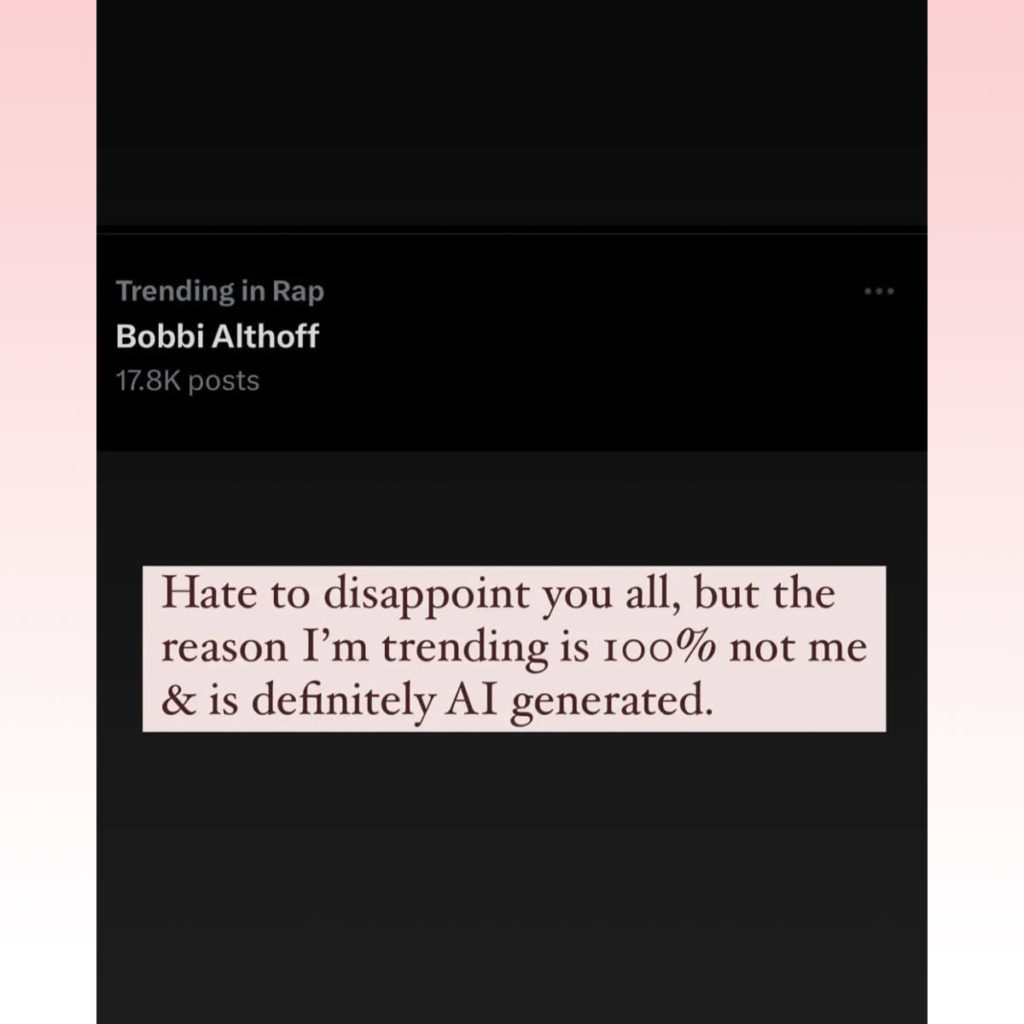

So what happened? A sexually explicit video, which was entirely fake, began circulating quickly on X. Although the video appeared to show Bobbi Althoff, it was not her at all. It was a fabricated piece of media made using artificial intelligence. This is concerning because it can be very hard to tell what is real and what is not.

The available information says that she "fell victim to vile seemingly AI deepfake porn images." A specific clip appeared on X that made it seem as though she was doing something inappropriate on camera. The clip was completely false: a digital fabrication, not a genuine recording of her.

When Bobbi Althoff saw this material, she expressed shock and disbelief. She reportedly said something like, "what the f*** is this, That’s not my podcast." Her reaction shows how distressing it is to see your likeness used this way without your agreement. It is a clear indication that she did not consent to it or have any part in it, which is the crux of the problem with this kind of content.

How Do These Fake Videos Spread- The Bobbi Althoff Deepfake Porn Example

How do fake videos like this one get around? The process is simple, and that is part of the trouble. Someone creates the fake content, often using readily available AI tools, and uploads it to a social media platform or a website. From there, people share it, and it can spread very quickly. Think about how fast a funny meme or a breaking news story can travel; this kind of content moves just as fast, if not faster, because it grabs attention in a negative way.

X was specifically mentioned as a place where this fake video spread. Social media sites are built for rapid sharing. A user sees something and reposts it, all their followers see it, and some of them repost it too. This chain reaction means a single fake video can reach thousands, even millions, of screens in a very short time.

The challenge for these platforms, as this case shows, is keeping up with the speed of the spread. They have rules against harmful content, but by the time they identify a fake video and take it down, it may already have been seen and shared countless times. The result is a cat-and-mouse game in which the creators of fake content keep looking for new ways around the systems designed to stop them.

What Are the Real-World Effects of Bobbi Althoff Deepfake Porn?

What happens to someone when their likeness is used in a sexually explicit deepfake? The real-world effects are serious and can be deeply hurtful. Seeing themselves placed in a compromising and false situation can cause the person a great deal of emotional distress. It is a violation of their personal image and reputation, and it can feel like a very public attack on who they are. It can cause anxiety and feelings of helplessness.

Beyond the emotional toll, there is also the damage to a person's public standing. Even though these videos are fake, some people might not realize that. They might believe the content is real, which can lead to misunderstandings or unfair judgments. This can affect a person's career, their relationships, and how they are perceived by fans or colleagues. It is a significant blow, considering how much effort people put into building their public image.

The incident also sparks wider discussions in society. When a deepfake like this surfaces, it makes people think about the ethics of AI and the legal aspects of creating and sharing such material. It forces us to confront questions about privacy in the digital age, and about what responsibilities platforms and individuals have for stopping the spread of harmful fakes. These questions do not have easy answers, but they become more urgent when a real person is so clearly affected.

Are There Any Rules About Bobbi Althoff Deepfake Porn?

Are there rules or laws that deal with sexually explicit deepfakes? The answer is complicated. Laws around deepfakes are still catching up to the technology. Some places have started to make it illegal to create or share fake, sexually explicit videos of a person without their consent. These laws aim to protect individuals from having their image misused in such a harmful way.

However, the legal landscape varies from one place to another. What is illegal in one country or state might not be in another. This creates challenges when fake videos spread across borders, which they often do. Proving who created a deepfake and holding them accountable can also be very difficult, given the anonymous nature of much of the internet.

On top of government laws, social media platforms have their own rules. Many platforms, including X, have policies against sharing non-consensual intimate imagery, which would include deepfake porn. They try to take down such content when it is reported. But, as mentioned before, the sheer volume of content and the speed at which it spreads make this a constant challenge, and removal is not always immediate.

What Can People Do About Deepfakes Like Bobbi Althoff Deepfake Porn?

What can people do when they come across deepfakes, or when they are affected by one? First, if you see something that looks like a deepfake, especially one that is harmful or sexually explicit, do not share it. Spreading it further makes the problem worse and causes more pain for the person involved. Instead, report it to the platform where you saw it. Most social media sites have ways to flag content that violates their rules, and that is the most immediate action you can take.

Individuals who find themselves victims, like Bobbi Althoff, have several options. They can contact legal professionals who specialize in digital rights or defamation. They can reach out to the platforms directly to request removal of the content. There are also organizations and support groups that help victims of online harassment and image abuse. It is a tough situation, but people and resources are available to help through what can be a very distressing time.

On a broader level, it is about raising awareness. The more people understand what deepfakes are and how they are used, the better equipped everyone will be to spot them and react responsibly. This includes educating ourselves and others about media literacy, so we can all be more critical of what we see online. Not everything you see is true, especially images and videos that seem too wild to be real.

Other Details from the Source Material

The source material also included some information that seems out of place given the main topic. For instance, it mentions "Bobbi Brown cosmetics," a well-known makeup and skincare brand, with lines such as "Shop makeup and skincare products on bobbi brown cosmetics online," "Learn bobbi's latest looks, makeup tips and techniques," "Shop bobbi brown makeup at sephora," and "Discover bobbi brown for makeup to enhance your natural beauty." There is also a truncated line: "She made ten normal shade lipsticks which as indicated by."

This information refers to the cosmetics brand rather than Bobbi Althoff the podcaster. It suggests only that the name "Bobbi" is associated with different things in the public eye. The source material also contained explicit descriptions of what the fake content supposedly showed. Those descriptions concern fabricated material that circulated without her consent.

Thinking About Digital Likeness and Bobbi Althoff Deepfake Porn

Incidents like this one raise bigger questions about our digital selves. Each of us has a digital likeness that exists online through our photos, videos, and public profiles. This likeness is part of who we are in the online world. When someone creates a deepfake, they are essentially stealing and manipulating that digital self, making it do or say things it never did. This is a profound violation because it attacks a very personal part of our identity.

The speed and ease with which these fakes can be made and shared mean that protecting our digital likeness is becoming more and more important. It is not just about famous people; anyone could be a target. This pushes us to think about what kind of world we want to build online. Do we want a space where anyone's image can be twisted and used against them, or one with clear boundaries and protections? It is a fundamental choice that society is grappling with as technology advances.

Ultimately, the Bobbi Althoff deepfake serves as a stark reminder of the challenges that come with new technologies like AI. While AI has many good uses, it also presents serious risks when misused. It calls for ongoing discussion among lawmakers, tech companies, and everyday people about how best to manage these tools and protect individuals from harm. That conversation needs to continue, because the stakes are high for everyone's personal safety and peace of mind online.

Detail Author:

- Name : Ms. Emmy Keeling PhD
